Goal of this post is a to set up a serverless infrastructure, managed in code, to serve predictions of a containerized machine learning model via Rest API as simple as:

$ curl \

$ -X POST \

$ --header "Content-Type: application/json" \

$ --data '{"sepal_length": 5.9, "sepal_width": 3, "petal_length": 5.1, "petal_width": 1.8}' \

$ https://my-endpoint-id.execute-api.eu-central-1.amazonaws.com/predict/

{"prediction": {"label": "virginica", "probability": 0.9997}}

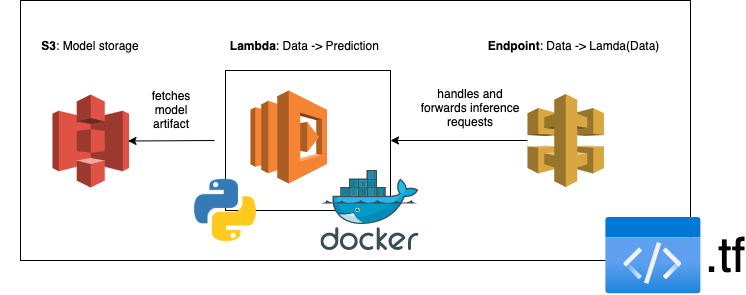

We will make use of Terraform to manage our infrastructure, including AWS ECR, S3, Lambda and API Gateway. We will make use of AWS Lambda to run the model code, in fact within a container, which is a very recent feature. We will use AWS API Gateway to serve the model via Rest API. The model artifact itself will live within S3. You can find the full code here.

Prerequisites 🔗

We use Terraform v0.14.0 and aws-cli/1.18.206 Python/3.7.9 Darwin/19.6.0 botocore/1.19.46.

We need authenticate to AWS to:

- set up the infrastructure using Terraform.

- train the model and store the resulting model artifact in S3

- test the infrastructure using the AWS CLI (here: )

The AWS credentials can be set up within a credentials file, i.e. ~/.aws/credentials, using a profile named lambda-model:

[lambda-model]

aws_access_key_id=...

aws_secret_access_key=...

region=eu-central-1

This will allow us to tell Terraform but also the AWS CLI which credentials to use. The Lambda function itself will authenticate using a role, and therefore no explicit credentials are needed.

Moreover we need to define the region, bucket name and some other variables, these are also defined within the Terraform variables as we will see later:

export AWS_REGION=$(aws --profile lambda-model configure get region)

export BUCKET_NAME="my-lambda-model-bucket"

export LAMBDA_FUNCTION_NAME="my-lambda-model-function"

export API_NAME="my-lambda-model-api"

export IMAGE_NAME="my-lambda-model"

export IMAGE_TAG="latest"

Creating a containerized model 🔗

Let us build a very simple containerized model on the iris dataset. We will define:

model.py: the actual model codeutils.py: utility functionstrain.py: a script to trigger model trainingtest.py: a script to generate predictions (for testing purposes)app.py: the Lambda handler

To store the model artifact and load data for model training we will define a few helper functions to communicate with S3 and load files with training data from public endpoints within a utils.py:

import boto3

import pickle

import pandas as pd

import os

# use aws profile if provided

PROFILE_NAME = os.environ.get("AWS_PROFILE", None)

session = boto3.Session(profile_name=PROFILE_NAME)

s3_client = session.client("s3")

def load_object(bucket_name, key, file):

"""Load object from S3 key (saving in file in between)."""

s3_client.download_file(bucket_name, key, file)

with open(file, "rb") as f:

object = pickle.load(f)

return object

def save_object(object, bucket_name, key, file):

"""Save object to S3 key (saving in file in between)."""

with open(file, "wb") as f:

pickle.dump(object, f)

s3_client.upload_file(file, bucket_name, key)

return

def load_data(url, feature_cols, target_col):

"""Load data from URL, splitting it into x and y."""

data = pd.read_csv(url, sep=",")

# normalize col names

data.columns = [col.replace(" ", "_").replace(".", "_").lower()

for col in data.columns]

y = data[target_col]

x = data[feature_cols]

return x, y

Furthermore we need a wrapper class for our model:

- to train it using external data

- to keep state and save and load the model artifact

- to pass payloads for inference

This will be defined in model.py:

import os

from typing import Tuple

from sklearn.impute import SimpleImputer

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.pipeline import Pipeline

from utils import save_object, load_object, load_data

BUCKET_NAME = os.environ["BUCKET_NAME"]

MODEL_KEY = "model.pkl"

LOCAL_FILE_PATH = os.path.join("/tmp/", MODEL_KEY) # lambda writeable path

DATA_URL = "https://forge.scilab.org/index.php/p/rdataset/source/file/master/csv/datasets/iris.csv"

FEATURE_COLS = ["sepal_length", "sepal_width", "petal_length", "petal_width"]

TARGET_COL = "species"

def create_model():

"""Create simple model pipeline with imputation step and gradient boosting classifier."""

pipeline = Pipeline(steps=[

("simple_imputer", SimpleImputer()),

("model", GradientBoostingClassifier()),

])

return pipeline

class ModelWrapper:

def __init__(self, bucket_name: str = BUCKET_NAME):

self.model = None

self.bucket_name = bucket_name

def train(self, url: str = DATA_URL):

"""Train model on supplied data and save model artifact to S3."""

print("Loading data.")

x, y = load_data(url=url, feature_cols=FEATURE_COLS, target_col=TARGET_COL)

print("Creating model.")

self.model = create_model()

print(f"Fitting model with {len(x)} datapoints.")

self.model.fit(x, y)

self.save_model()

return

def predict(self, data: dict) -> Tuple[str, float]:

"""Compute predicted label and probability for provided data."""

if self.model is None:

self.load_model()

# sort cols and put into array

x = [[data[feature] for feature in FEATURE_COLS]]

label = self.model.predict(x)[0]

# extract probabilities

classes = self.model.named_steps['model'].classes_

probas = dict(zip(classes, self.model.predict_proba(x)[0]))

proba = probas[label]

return label, proba

def load_model(self):

"""Load model artifact from S3."""

print("Loading model.")

self.model = load_object(self.bucket_name, MODEL_KEY, LOCAL_FILE_PATH)

return

def save_model(self):

"""Save model artifact to S3."""

print("Saving model.")

save_object(self.model, self.bucket_name, MODEL_KEY, LOCAL_FILE_PATH)

return

To train and predict without the actual Lambda infrastructure we will also set up two scripts, a train.py and a predict.py. The training script can be very simple, we could also pass other data sources to the train method.

from model import ModelWrapper

model_wrapper = ModelWrapper()

model_wrapper.train()

And a simple predict.py that prints predictions to the console:

import json

import sys

from model import ModelWrapper

model_wrapper = ModelWrapper()

model_wrapper.load_model()

data = json.loads(sys.argv[1])

print(f"Data: {data}")

prediction = model_wrapper.predict(data=data)

print(f"Prediction: {prediction}")

Lastly, we need the handler to pass data to the model wrapper. This is what will be called by the Lambda function. We will keep it very minimalistic, the handler will simply pass the request to the wrapper and transform the returned predictions into the output format expected by API Gateway:

import json

import os

from model import ModelWrapper

BUCKET_NAME = os.environ["BUCKET_NAME"]

# load outside of handler for warm start

model_wrapper = ModelWrapper(bucket_name=BUCKET_NAME)

model_wrapper.load_model()

def handler(event, context):

print("Event received:", event)

data = event["body"]

if isinstance(data, str):

data = json.loads(data)

print("Data received:", data)

label, proba = model_wrapper.predict(data=data)

body = {

"prediction": {

"label": label,

"probability": round(proba, 4),

},

}

response = {

"statusCode": 200,

"body": json.dumps(body),

"isBase64Encoded": False,

# additional headers can be passed here..

}

return response

We will put all this into Docker (more specifically a Dockerfile) and make use of one of the AWS Lambda base images:

FROM public.ecr.aws/lambda/python:3.8

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY app.py utils.py model.py train.py predict.py ./

CMD ["app.handler"]

Creating ECR and S3 resources 🔗

Let us now define ECR repository and S3 bucket via Terraform. The properly organized Terraform code can be found within the GitHub repo.

We define some config (variables and locals) and AWS as provider. Alternatively the variables could also be loaded from the environment.

variable aws_profile {

type = string

default = "lambda-model"

}

locals {

image_name = "my-lambda-model"

image_version = "latest"

bucket_name = "my-lambda-model-bucket"

lambda_function_name = "my-lambda-model-function"

api_name = "my-lambda-model-api"

api_path = "predict"

}

provider "aws" {

# region within profile

profile = var.aws_profile

}

Moreover S3 and ECR repository:

resource "aws_ecr_repository" "lambda_model_repository" {

name = local.image_name

image_tag_mutability = "MUTABLE"

}

resource "aws_s3_bucket" "lambda_model_bucket" {

bucket = local.bucket_name

acl = "private"

}

Let us create our S3 bucket and ECR repository:

(cd terraform && \

terraform apply \

-target=aws_ecr_repository.lambda_model_repository \

-target=aws_s3_bucket.lambda_model_bucket)

Building and pushing the docker image 🔗

We can now build our docker image and push it to the repo (alternatively this could be done in a null_resource provisioner in Terraform). We export registry ID to construct the image URI where we want to push the image:

export REGISTRY_ID=$(aws ecr \

--profile lambda-model \

describe-repositories \

--query 'repositories[?repositoryName == `'$IMAGE_NAME'`].registryId' \

--output text)

export IMAGE_URI=${REGISTRY_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com/${IMAGE_NAME}

# ecr login

$(aws --profile lambda-model \

ecr get-login \

--region $AWS_REGION \

--registry-ids $REGISTRY_ID \

--no-include-email)

Now building and pushing is as easy as:

(cd app && \

docker build -t $IMAGE_URI . && \

docker push $IMAGE_URI:$IMAGE_TAG)

Training the model 🔗

Let us train our model now using the train.py entry point of our newly created docker container:

docker run \

-v ~/.aws:/root/.aws \

-e AWS_PROFILE=lambda-model \

-e BUCKET_NAME=$BUCKET_NAME \

--entrypoint=python \

$IMAGE_URI:$IMAGE_TAG \

train.py

# Loading data.

# Creating model.

# Fitting model with 150 datapoints.

# Saving model.

Testing the model 🔗

Using the predict.py entry point we can also test it with some data:

docker run \

-v ~/.aws:/root/.aws \

-e AWS_PROFILE=lambda-model \

-e BUCKET_NAME=$BUCKET_NAME \

--entrypoint=python \

$IMAGE_URI:$IMAGE_TAG \

predict.py \

'{"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2}'

# Loading model.

# Data: {'sepal_length': 5.1, 'sepal_width': 3.5, 'petal_length': 1.4, 'petal_width': 0.2}

# Prediction: ('setosa', 0.9999555689374946)

Planning our main infrastructure with Terraform 🔗

We can plan the inference part of the infrastructure now, the Lambda & API Gateway setup:

- the Lambda function, including a role and policy to access S3 and produce logs,

- the API Gateway, including the necessary permissions and settings.

resource "aws_lambda_function" "lambda_model_function" {

function_name = local.lambda_function_name

role = aws_iam_role.lambda_model_role.arn

# tag is required, otherwise a "source image ... is not valid" error will pop up

image_uri = "${aws_ecr_repository.lambda_model_repository.repository_url}:${local.image_version}"

package_type = "Image"

# we can check the memory usage in the lambda dashboard, sklearn is a bit memory hungry..

memory_size = 512

environment {

variables = {

BUCKET_NAME = local.bucket_name

}

}

}

resource "aws_iam_role" "lambda_model_role" {

name = "my-lambda-model-role"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}]

}

EOF

}

resource "aws_iam_role_policy_attachment" "lambda_model_policy_attachement" {

role = aws_iam_role.lambda_model_role.name

policy_arn = aws_iam_policy.lambda_model_policy.arn

}

resource "aws_iam_policy" "lambda_model_policy" {

name = "my-lambda-model-policy"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Action": [

"logs:*"

],

"Resource": "arn:aws:logs:*:*:*"

},

{

"Effect": "Allow",

"Action": [

"s3:*"

],

"Resource": "arn:aws:s3:::${local.bucket_name}/*"

}

]

}

EOF

}

resource "aws_api_gateway_rest_api" "lambda_model_api" {

name = local.api_name

}

resource "aws_api_gateway_resource" "lambda_model_gateway" {

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

parent_id = aws_api_gateway_rest_api.lambda_model_api.root_resource_id

path_part = "{proxy+}"

}

resource "aws_api_gateway_method" "lambda_model_proxy" {

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

resource_id = aws_api_gateway_resource.lambda_model_gateway.id

http_method = "POST"

authorization = "NONE"

}

resource "aws_api_gateway_integration" "lambda_model_integration" {

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

resource_id = aws_api_gateway_method.lambda_model_proxy.resource_id

http_method = aws_api_gateway_method.lambda_model_proxy.http_method

integration_http_method = "POST"

type = "AWS_PROXY"

uri = aws_lambda_function.lambda_model_function.invoke_arn

}

resource "aws_api_gateway_method" "lambda_model_method" {

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

resource_id = aws_api_gateway_rest_api.lambda_model_api.root_resource_id

http_method = "POST"

authorization = "NONE"

}

resource "aws_api_gateway_integration" "lambda_root" {

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

resource_id = aws_api_gateway_method.lambda_model_method.resource_id

http_method = aws_api_gateway_method.lambda_model_method.http_method

integration_http_method = "POST"

type = "AWS_PROXY"

uri = aws_lambda_function.lambda_model_function.invoke_arn

}

resource "aws_api_gateway_deployment" "lambda_model_deployment" {

depends_on = [

aws_api_gateway_integration.lambda_model_integration,

aws_api_gateway_integration.lambda_root,

]

rest_api_id = aws_api_gateway_rest_api.lambda_model_api.id

stage_name = local.api_path

# added to stream changes

stage_description = "deployed at ${timestamp()}"

lifecycle {

create_before_destroy = true

}

}

resource "aws_lambda_permission" "lambda_model_permission" {

statement_id = "AllowAPIGatewayInvoke"

action = "lambda:InvokeFunction"

function_name = aws_lambda_function.lambda_model_function.function_name

principal = "apigateway.amazonaws.com"

# The "/*/*" portion grants access from any method on any resource

# within the API Gateway REST API.

source_arn = "${aws_api_gateway_rest_api.lambda_model_api.execution_arn}/*/*"

}

output "endpoint_url" {

value = aws_api_gateway_deployment.lambda_model_deployment.invoke_url

}

We can now apply this using the Terraform CLI again, which will take around a minute.

(cd terraform && terraform apply)

Testing the infrastructure 🔗

To test the Lambda function we can invoke it using the AWS CLI and store the response to response.json:

aws --profile lambda-model \

lambda \

invoke \

--function-name $LAMBDA_FUNCTION_NAME \

--payload '{"body": {"sepal_length": 5.9, "sepal_width": 3, "petal_length": 5.1, "petal_width": 1.8}}' \

response.json

# {

# "StatusCode": 200,

# "ExecutedVersion": "$LATEST"

# }

The response.json will look like this:

{

"statusCode": 200,

"body": "{\"prediction\": {\"label\": \"virginica\", \"probability\": 0.9997}}",

"isBase64Encoded": false

}

And we can also test our API using curl or python. We need to find out our endpoint URL first, for example again by using the AWS CLI or alternatively the Terraform output prints.

export ENDPOINT_ID=$(aws \

--profile lambda-model \

apigateway \

get-rest-apis \

--query 'items[?name == `'$API_NAME'`].id' \

--output text)

export ENDPOINT_URL=https://${ENDPOINT_ID}.execute-api.${AWS_REGION}.amazonaws.com/predict

curl \

-X POST \

--header "Content-Type: application/json" \

--data '{"sepal_length": 5.9, "sepal_width": 3, "petal_length": 5.1, "petal_width": 1.8}' \

$ENDPOINT_URL

# {"prediction": {"label": "virginica", "probability": 0.9997}}

Alternatively we can send POST requests with python:

import requests

import os

endpoint_url = os.environ['ENDPOINT_URL']

data = {"sepal_length": 5.9, "sepal_width": 3, "petal_length": 5.1, "petal_width": 1.8}

req = requests.post(endpoint_url, json=data)

req.json()

More remarks 🔗

To update the container image we can use the CLI again:

aws --profile lambda-model \

lambda \

update-function-code \

--function-name $LAMBDA_FUNCTION_NAME \

--image-uri $IMAGE_URI:$IMAGE_TAG

If we want to remove our infrastructure we have to empty our bucket first, after which we can destroy our resources:

aws s3 --profile lambda-model rm s3://${BUCKET_NAME}/model.pkl

(cd terraform && terraform destroy)

Conclusion 🔗

With the new feature of containerized Lambdas it became even more easy to deploy machine learning models into the AWS serverless landscape. There are many AWS alternatives to this (ECS, Fargate, Sagemaker), but Lambda comes with many tools out of the box, for example request based logging and monitoring, and it allows fast prototyping with ease. Nevertheless it also has some downsides, for example the request latency overhead and the usage of somewhat proprietary cloud service which is not fully customizable.

Another advantage is that containerization allows us to isolate the machine learning code and properly maintain package dependencies. If we keep the handler code to a minimum we can test the image carefully and make sure our development environment is very close to the production infrastructure. At the same time we are not locked into AWS technology - we can very easily replace the handler with our own web framework and deploy it to Kubernetes.

Lastly, we can potentially improve our model infrastructure by running the training remotely (for example using ECS), adding versioning and CloudWatch alerts. If needed, we could add a process to keep the Lambda warm, since a cold start takes a few seconds.